Sora, OpenAI’s new AI video generation platform, finally launched on Monday, and it is a surprisingly rich offering with simple tools for almost instantly generating shockingly realistic-looking videos. Even in my all-too-brief hands-on, I could see that Sora is about to change everything about video creation.

OpenAI CEO Sam Altman and company were wrapping up the third presentation of their planned “12 Days of OpenAI,” but I could scarcely wait to exit that live (and, I assume, not AI-generated) video feed to dive into today’s content-creation-changing announcement.

Ever since we started seeing short Sora clips created by chosen video artists and shared by OpenAI, I and anyone with even a passing interest in AI and video have been waiting for this moment: our chance to touch and try Sora.

Spoiler alert: Sora is stunning but also so massively overloaded I couldn’t create more than a handful of AI video samples before the system’s servers barked that they were “at capacity.” Even so, this glimpse was so, so worth it.

Sora is important enough that its generative models do not live inside the ChatGPT large language model space or even inside OpenAI’s homepage. The AI video-generation platform warrants its own destination at Sora.com.

From there, I logged into my ChatGPT Plus account (you need at least that level to start creating up to 50 generations a month; Pro gets you unlimited). I also had to provide my age (the year is blurred because I am vain).

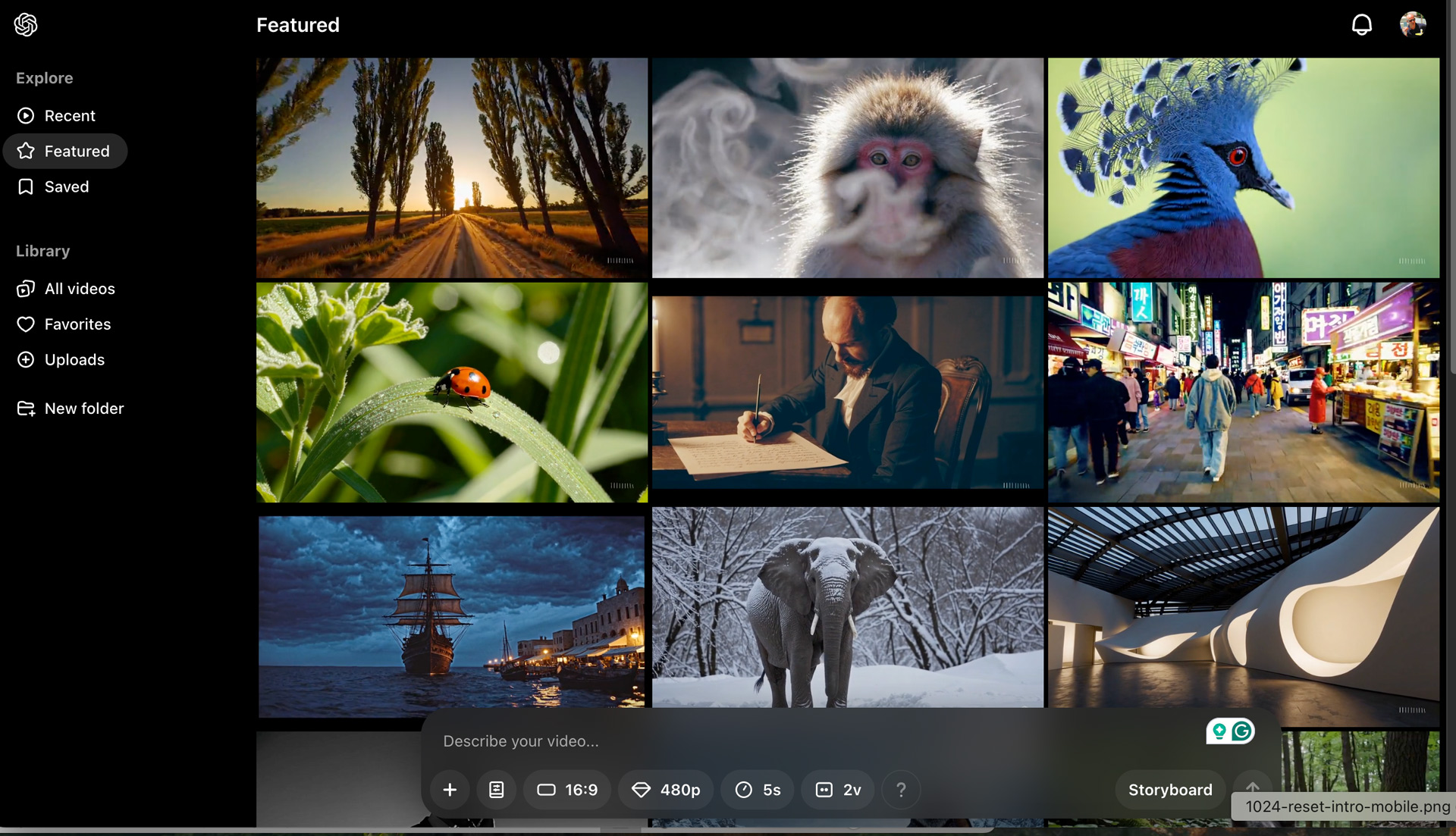

The landing page is, as promised, a library grid of everyone else’s AI-generated video content. It’s a great place to seek inspiration and to see the stunning realism and surrealism possible with OpenAI’s Sora models. I could even use any of those videos as a starting point for my own creation by “Remixing” it.

I chose, though, to generate something new.

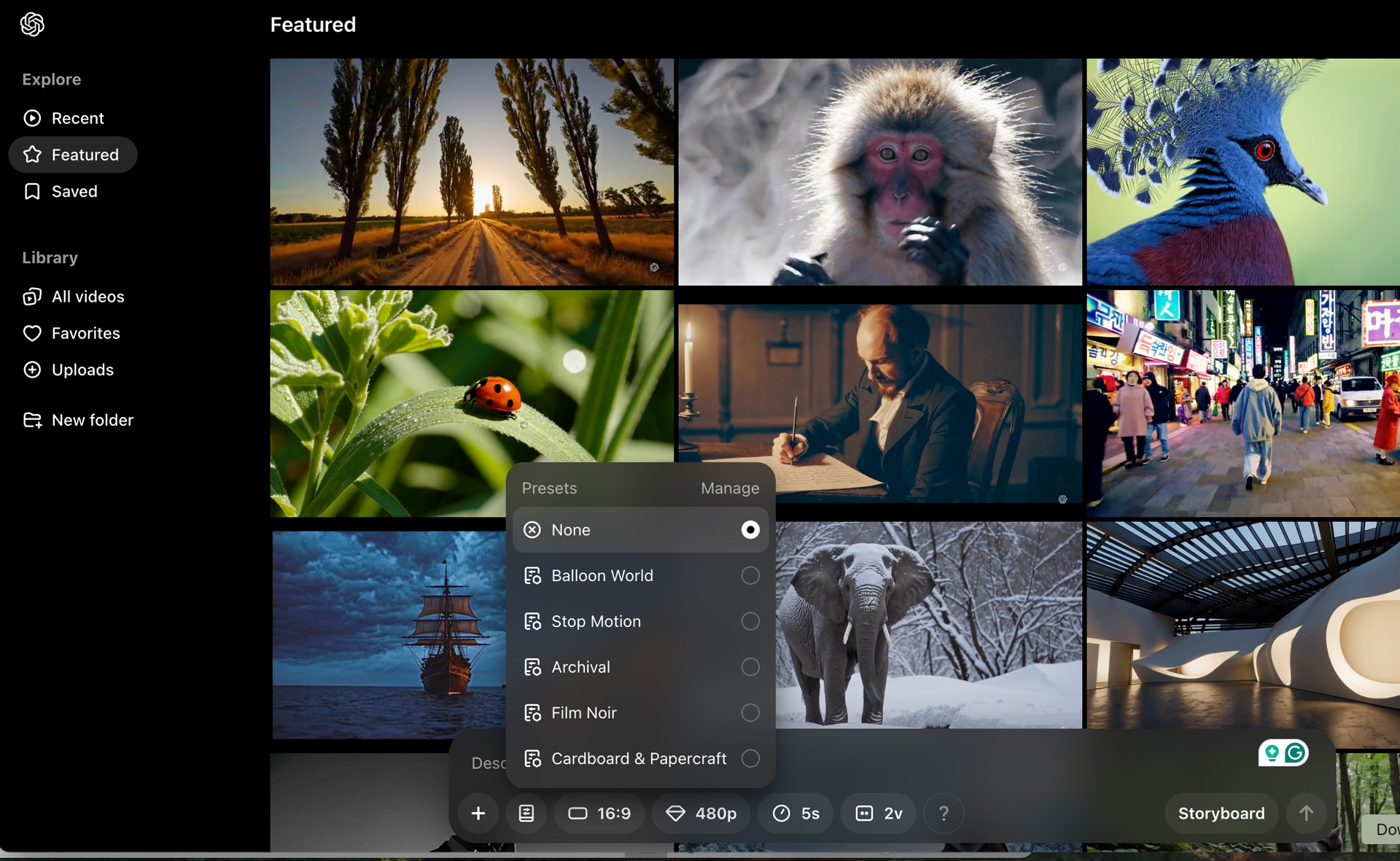

There is a prompt field at the bottom of the page that lets you describe your video and set some parameters, including the aspect ratio, resolution, duration, and the number of video variations Sora returns for you to choose from. There’s also a style button that includes options like “Balloon World,” “Stop Motion,” and “Film Noir.”

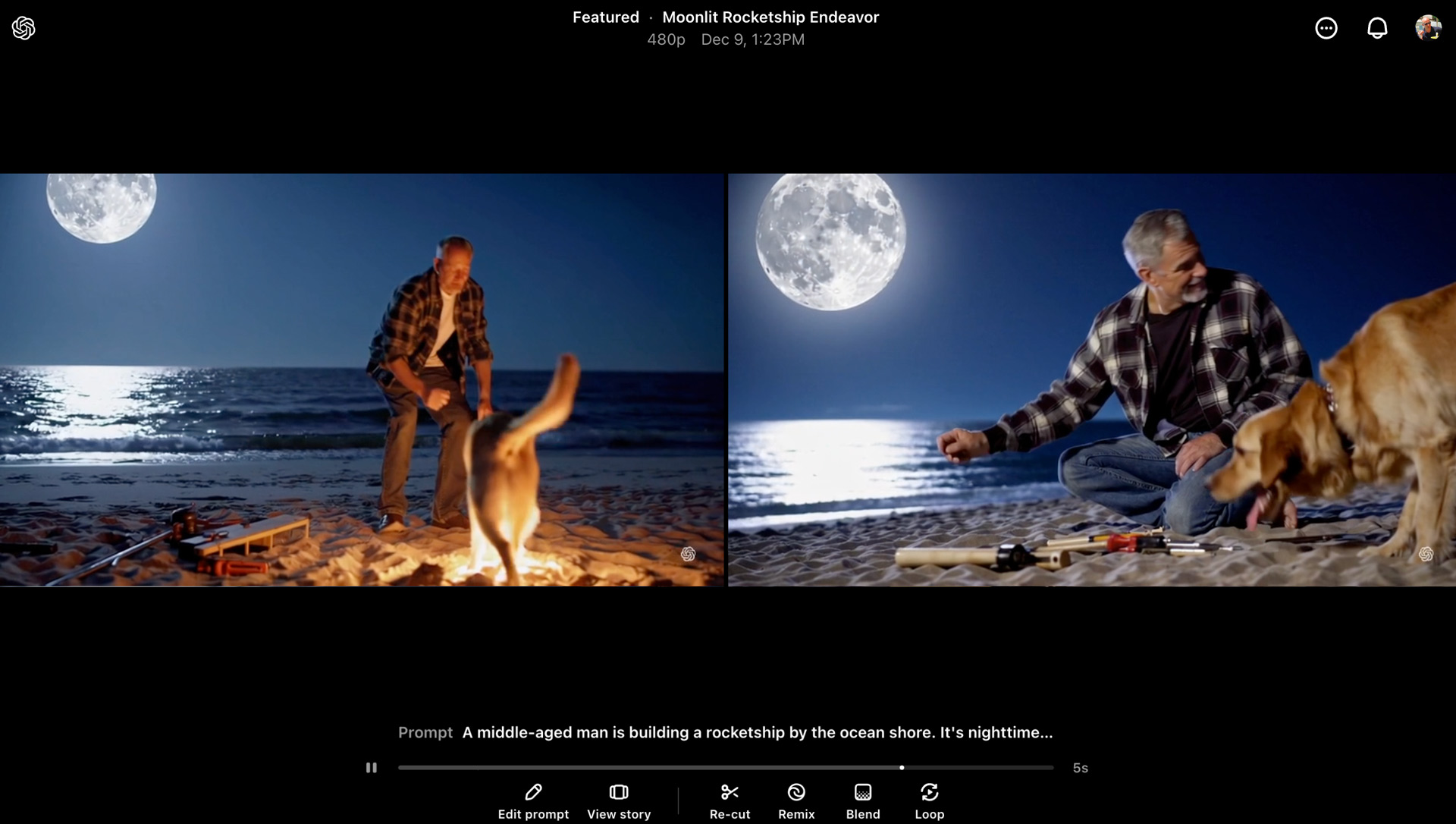

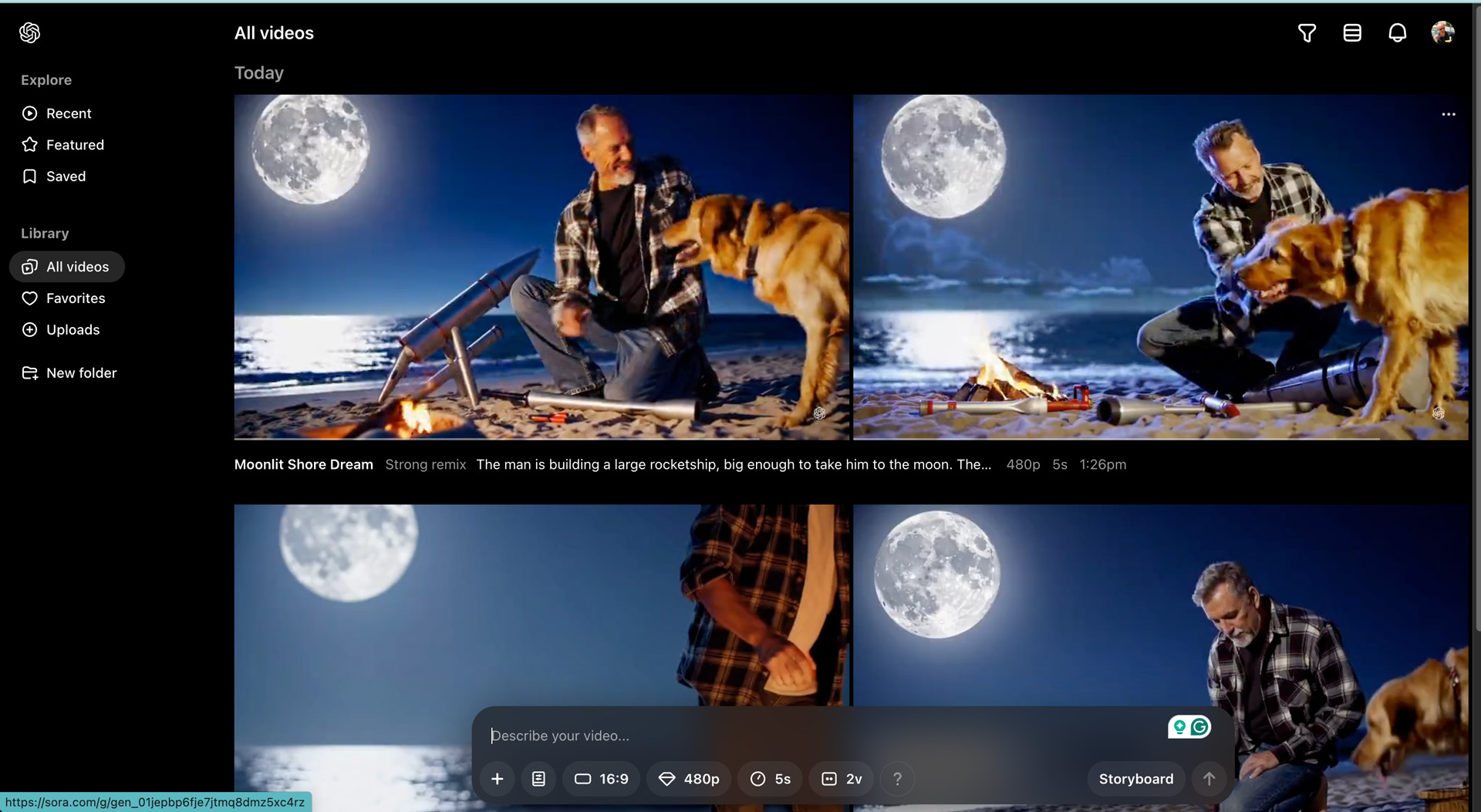

I’m a fan of film noir and am intrigued by the idea of “Balloon World,” but I didn’t want to hamper the speed in any way, so I skipped the styles and started typing my prompt. I asked for something simple: a middle-aged guy building a rocket ship near the ocean under a moonlit sky, with a campfire nearby and a friendly dog. It was not a detailed description.

I hit the up arrow on the right-hand side of the prompt box, and Sora got to work.

Within about a minute, I had two five-second video options. They looked realistic. Well, at least one of them did. One clip featured a golden retriever with an extra tail where its head should’ve been. Over the course of the video’s five-second runtime, the extra tail did become a head. The other video was less distressing. In fact, it was nearly perfect. The only problem was the rocket ship: it was a model and not something my character could fly in.

At this point, I could edit my prompt and try again, view the video’s storyboard, blend it with a different video, loop it, or remix it. I chose the video with the normal dog and then selected remix.

You can do a light remix, a subtle one, a strong one, or even a custom remix. My system defaulted to a strong remix, and I asked for a larger rocket, one large enough to take the man to the moon. I also wanted it repositioned behind him and finally asked for the campfire to be partially visible.

The remix took almost five minutes, resulting in another beautiful video. Sure, Sora knows nothing about spaceflight or rocket science, but it got the composition right, and I can imagine how I could nudge this video in the right direction.

In fact, that was my plan, but when I tried another remix, Sora complained it was at capacity.

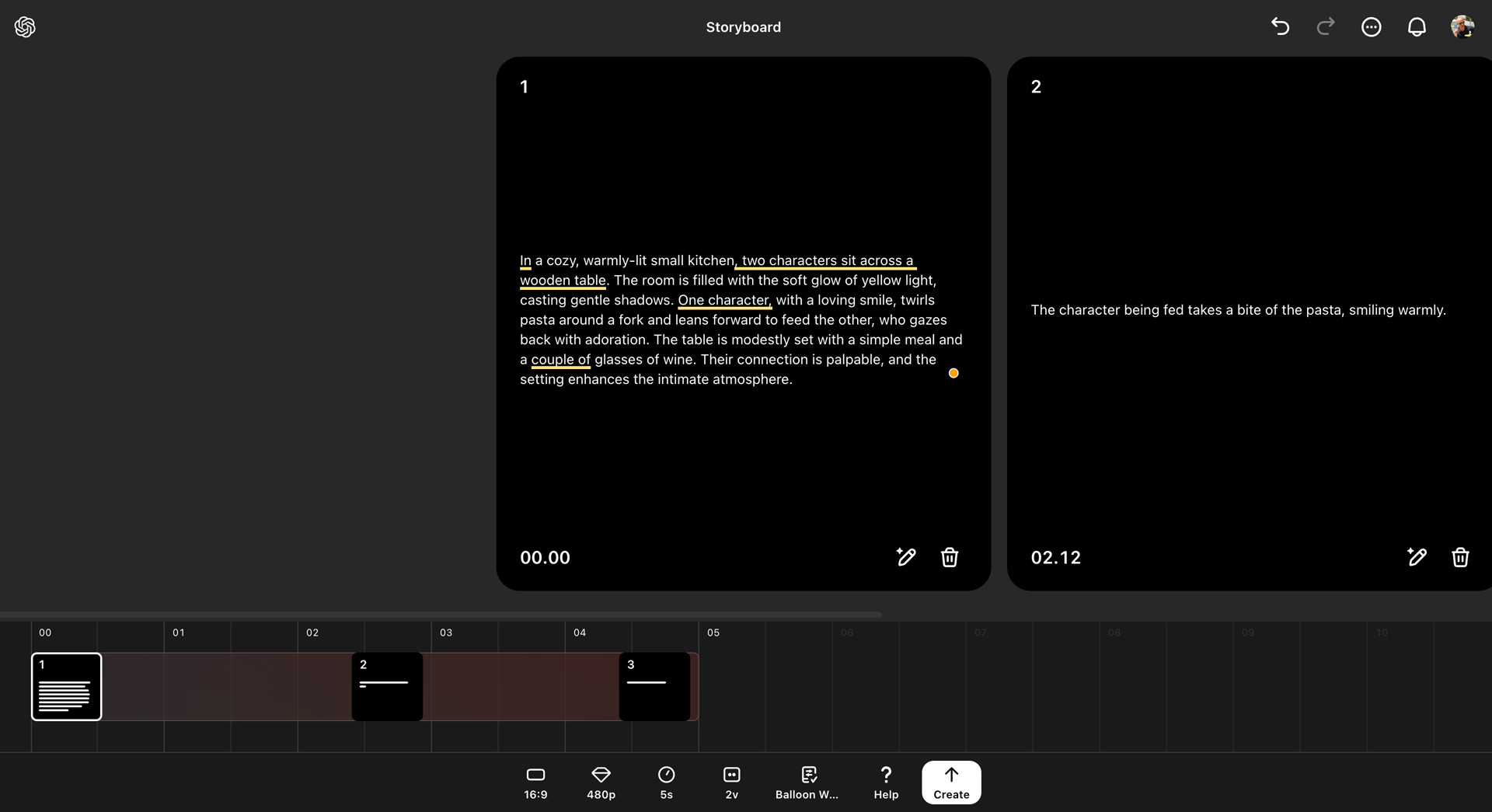

I also tried using Storyboard to create another video. In this case, I entered a prompt that became the first board in my storyboard; Sora automatically interpreted it and then let me add more beats to the video via additional boards. I had a video in mind of a “Balloon World” scene with two characters sharing a romantic pasta dinner, but again, Sora was out of capacity.

I wanted to try more and see, for instance, how far you could take Sora. OpenAI said they’re starting off with “conservative” content controls, which may mean things like nudity and violence will be rejected outright. But you know, AI prompt writers always know how to get the best and worst out of generative AI. I think we’ll just have to wait and see what happens on this front.

Server issues aside, it’s clear Sora is set to turn the video creation industry on its head. It’s not just its uncanny ability to take simple prompts and create realistic videos in a matter of minutes; it’s the wealth of video editing and creation tools available on Day 1.

I guarantee you the model will get more powerful, the tools even smarter, and servers more plentiful. I don’t know exactly what Sora means for video professionals worldwide, but the sooner they try this, the faster they’ll get ready for what’s to come.